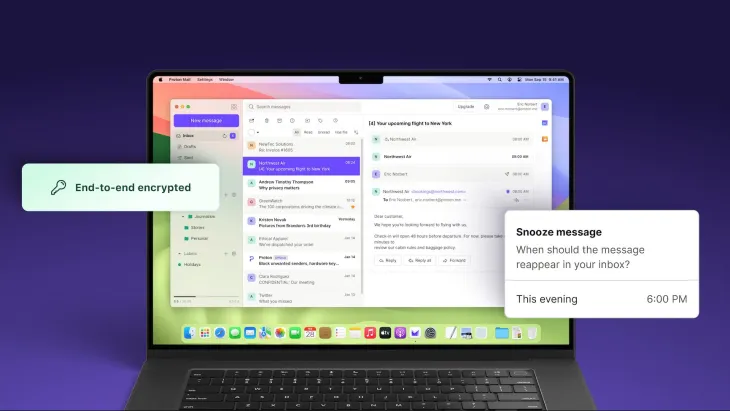

As part of its ongoing campaign to inject generative AI into all its products, Microsoft today introduced Security Copilot, a new tool designed to “summarize” and “make sense” of threat intelligence.

In a brief announcement, Microsoft pitched Security Copilot as a way to correlate data on attacks while prioritizing security incidents. Countless tools already do this, but Microsoft claims that Security Copilot, which integrates with the company’s existing security product portfolio, is improved by OpenAI’s generative AI models, specifically the recently released text-generating GPT-4.

“Advancing the state of security requires both people and technology — human ingenuity paired with the most advanced tools that help apply human expertise at speed and scale,” Microsoft Security executive vice president Charlie Bell said in a canned statement. “With Security Copilot we are building a future where every defender is empowered with the tools and technologies necessary to make the world a safer place.”


Oddly enough, Microsoft didn’t divulge exactly how Security Copilot incorporates GPT-4. Instead, it emphasized a custom-trained model (possibly GPT-4-based) that powers Security Copilot, “incorporates a growing set of security-specific skills” and “deploys skills and queries” relevant to cybersecurity.

Microsoft emphasized that the model was not trained on customer data, addressing a common criticism leveled at language model-driven services.


Microsoft claims that this custom model helps “catch what other approaches might miss” by answering security-related questions, advising on the best course of action, and summarizing events and processes. But given how unreliable text-generating models can be, it’s unclear how effective such a model would be in production.

Microsoft itself admits that the custom Security Copilot model doesn’t always get everything right. “AI-generated content can contain mistakes,” Microsoft wrote. “As we continue to learn from these interactions, we are adjusting its responses to create more coherent, relevant and useful answers.”